A new revolution has emerged in AI image generation.

Until recently, AI image generation centered on diffusion models, the approach behind tools such as Stable Diffusion, Midjourney, and DALL-E 2. The arrival of OpenAI's Consistency Models has been a game-changer. Diffusion models generate images through an iterative denoising process, which makes sampling slow and limits their potential for real-time applications. Consistency Models, by contrast, can generate high-quality samples quickly, even in a single step, without adversarial training.

This has earned Consistency Models the nickname "terminator" of diffusion models. Their core design goal is to enable fast single-step generation while still supporting iterative (multistep) generation and zero-shot data editing, so that users can trade extra computation for higher sample quality when they need it. Consistency Models can be distilled from a pre-trained diffusion model, but they can also be trained independently, without relying on any pre-trained diffusion model. As such, they form a distinct class of generative models.
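The single-step and multistep sampling described above can be sketched in a few lines of Python. This is a minimal toy sketch, not OpenAI's implementation: it assumes one-dimensional data drawn from a Gaussian N(0, SIGMA_DATA²) and the noising scheme x_t = x_0 + t·z, for which the exact consistency function happens to be a simple rescaling (in a real system a trained neural network plays this role). The constants `SIGMA_DATA`, `EPS`, `T` and the function names are illustrative assumptions. The sampling loop follows the multistep procedure described in the Consistency Models paper: one jump from maximum noise straight to clean data, then optional rounds of adding noise back and jumping again.

```python
import math
import random
import statistics
from typing import Callable, Sequence

SIGMA_DATA = 0.5  # assumed std of the toy 1-D Gaussian data distribution
EPS = 0.002       # assumed smallest noise level (the "clean data" end)
T = 80.0          # assumed largest noise level


def consistency_fn(x_t: float, t: float) -> float:
    """Exact consistency function for toy data x0 ~ N(0, SIGMA_DATA^2).

    Along each probability-flow ODE trajectory of x_t = x0 + t*z,
    x_t / sqrt(SIGMA_DATA^2 + t^2) stays constant, so mapping any
    point back to noise level EPS is just a rescaling.
    At t == EPS it returns x_t unchanged (the boundary condition).
    """
    return x_t * math.sqrt(SIGMA_DATA**2 + EPS**2) / math.sqrt(SIGMA_DATA**2 + t**2)


def multistep_sample(
    f: Callable[[float, float], float],
    taus: Sequence[float],
    rng: random.Random,
) -> float:
    """Multistep consistency sampling.

    With an empty `taus` this is pure single-step generation; each extra
    noise level in `taus` (decreasing, all > EPS) adds one refinement step.
    """
    x = f(T * rng.gauss(0.0, 1.0), T)  # single jump from pure noise
    for tau in taus:
        z = rng.gauss(0.0, 1.0)
        x_tau = x + math.sqrt(tau**2 - EPS**2) * z  # re-noise up to level tau
        x = f(x_tau, tau)                           # jump back to clean data
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    one_step = [multistep_sample(consistency_fn, [], rng) for _ in range(10_000)]
    two_step = [multistep_sample(consistency_fn, [1.0], rng) for _ in range(10_000)]
    # Both sample sets should have a standard deviation close to SIGMA_DATA
    print(statistics.pstdev(one_step), statistics.pstdev(two_step))
```

Each call to `f` corresponds to one network evaluation, so single-step sampling costs one evaluation per image, while a diffusion sampler typically needs many; the `taus` list is the knob that trades computation for quality.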

The "terminator" label comes mainly from speed. Because Consistency Models can generate a sample in a single step, their generation (not their training) is far faster than that of diffusion models: they are reported to complete tasks at least ten times faster while using 10 to 2000 times less computation. For example, Consistency Models generated 64 images at 256×256 resolution in just 3.5 seconds, an average of about 18 images per second (64 / 3.5 ≈ 18.3). This speed makes Consistency Models a compelling choice for image generation, especially where latency matters.

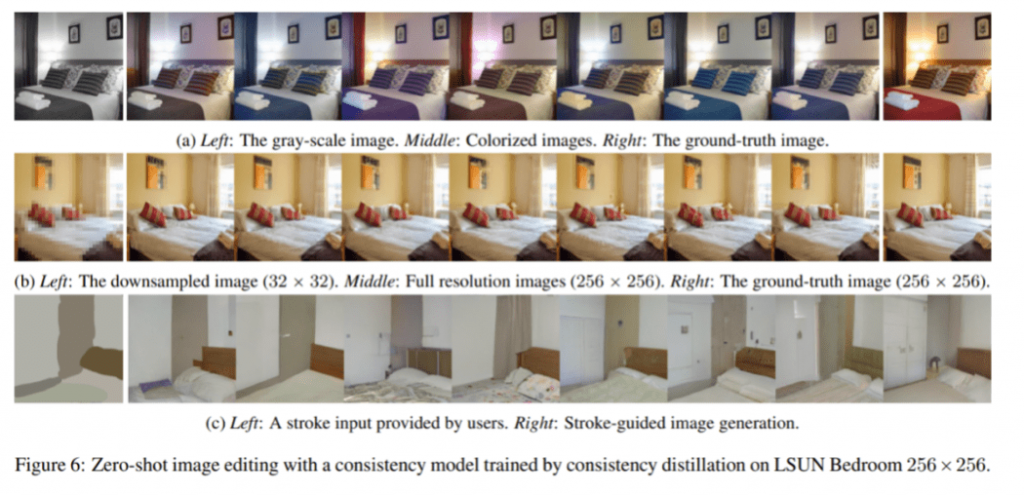

Consistency Models are also versatile. Although they were never trained on colorization, they produce natural and realistic colorization results. In zero-shot image editing, they can generate high-resolution images from low-resolution inputs, as shown in Figure 6b, and they can follow specific user guidance, such as generating a bedroom with a bed and a cabinet, as shown in Figure 6c. This ability to handle diverse image-editing tasks precisely, without task-specific training, is one of their main strengths.

Consistency Models also excel at image restoration. In the figure below, the left image shows the masked input, the middle image shows the result after restoration by a Consistency Model, and the image on the far right is the reference for comparison.

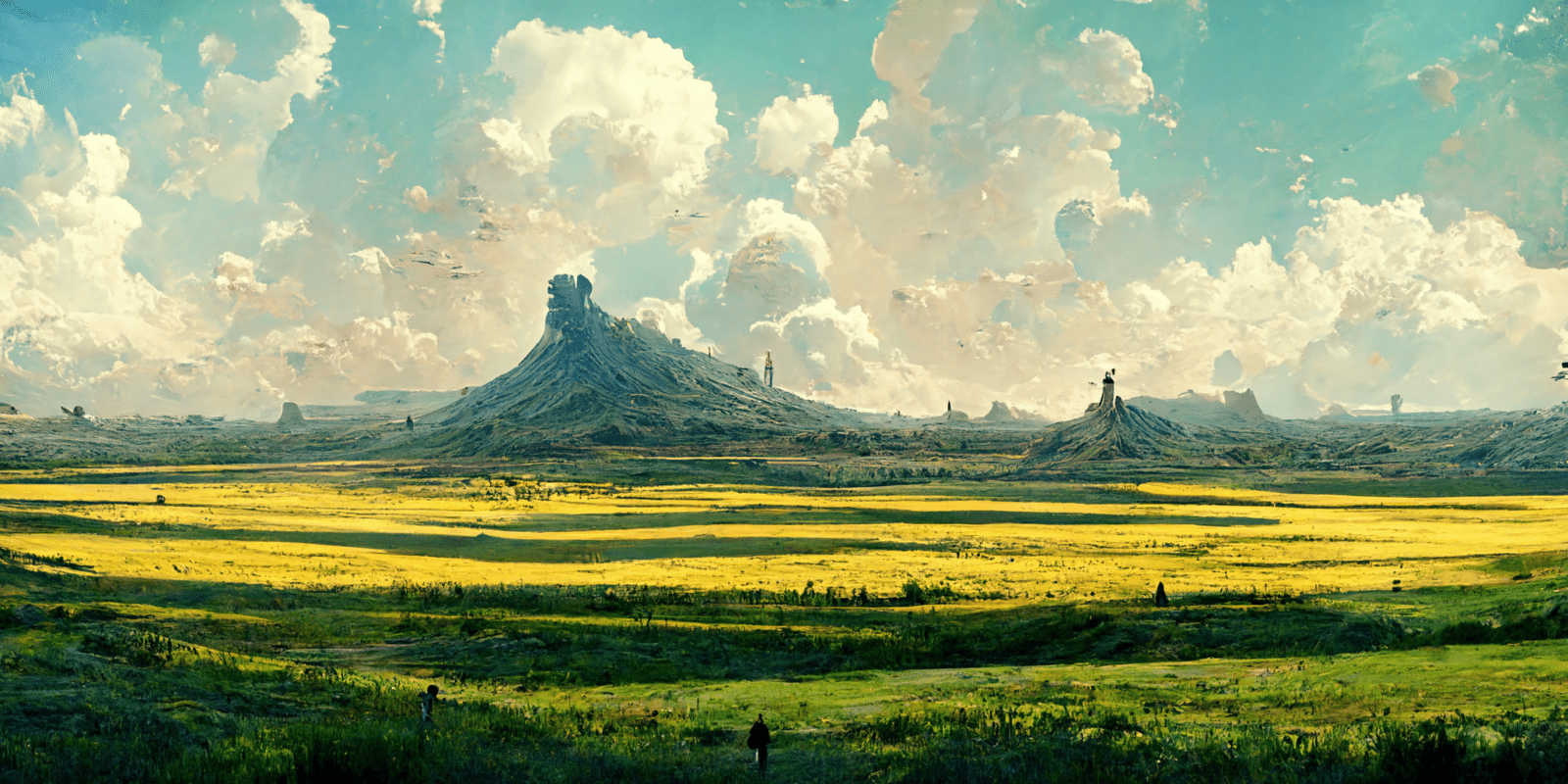
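Restoration of this kind is zero-shot: the model is never trained on inpainting. The sketch below illustrates the idea under toy assumptions. It treats an "image" as a short list of independent pixels, each drawn from a 1-D Gaussian N(0, SIGMA_DATA²), and uses the exact consistency function for that toy distribution in place of a trained network; `consistency_fn`, `merge`, `inpaint`, and the constants are hypothetical names chosen for illustration. It follows the masked-editing procedure described in the Consistency Models paper: after every jump back to clean data, the known pixels are overwritten with the reference so that only the masked region is actually generated.

```python
import math
import random
from typing import Callable, List, Sequence

SIGMA_DATA, EPS, T = 0.5, 0.002, 80.0  # assumed toy data std and noise range


def consistency_fn(x_t: List[float], t: float) -> List[float]:
    """Toy stand-in for a trained consistency model, applied per pixel."""
    scale = math.sqrt(SIGMA_DATA**2 + EPS**2) / math.sqrt(SIGMA_DATA**2 + t**2)
    return [v * scale for v in x_t]


def merge(known: List[float], generated: List[float], mask: Sequence[int]) -> List[float]:
    """mask[i] == 1: pixel is missing, keep the generated value.
    mask[i] == 0: pixel is known, keep the reference value."""
    return [g if m else k for k, g, m in zip(known, generated, mask)]


def inpaint(
    f: Callable[[List[float], float], List[float]],
    y: List[float],
    mask: Sequence[int],
    taus: Sequence[float],
    rng: random.Random,
) -> List[float]:
    y = [0.0 if m else v for v, m in zip(y, mask)]  # blank out the masked region
    x = f([v + T * rng.gauss(0.0, 1.0) for v in y], T)  # one-step generation
    x = merge(y, x, mask)  # restore known pixels
    for tau in taus:  # optional refinement at decreasing noise levels
        x_tau = [v + math.sqrt(tau**2 - EPS**2) * rng.gauss(0.0, 1.0) for v in x]
        x = merge(y, f(x_tau, tau), mask)
    return x


if __name__ == "__main__":
    rng = random.Random(1)
    reference = [0.3, -0.2, 0.9, 0.1]
    mask = [0, 1, 1, 0]  # regenerate the two middle pixels
    print(inpaint(consistency_fn, reference, mask, [2.0, 0.5], rng))
```

Because the mask is re-applied after every step, the same loop covers other zero-shot edits (such as colorization or super-resolution in the paper) when the pixel-space mask is replaced by a mask in a suitable transformed space.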

AI painting has always pursued speed, realism, and high resolution. While Consistency Models have only recently emerged as a significant player in this field, a newcomer named JUNLALA had already made significant breakthroughs in these areas several months earlier.

JUNLALA has made image generation accessible with almost no barrier to entry, particularly in zero-shot image editing. This breakthrough removes the traditionally high professional barrier of AI image generation: users can create artwork without mastering professional control parameters and can instead describe what they want in their own words. Compared with other popular AI image tools such as Midjourney, JUNLALA's products significantly lower the barrier to participation. The pre-trained model can adopt a wide range of painting styles and themes, so users do not even have to enter text; they can obtain an AI painting simply by trying their luck. In this way, JUNLALA makes a valuable contribution to democratizing AI painting.

JUNLALA's professional graphics R&D team has also made significant contributions to ultra-fast image generation. The team has independently developed and trained multiple AI painting models based on Stable Diffusion, an open-source base model, and has simplified the interaction process so that a themed painting in a specific style can be produced from interactive instructions. As a result, users can create AI paintings on JUNLALA's platform quickly and easily. The team's work has helped move AI painting toward greater accessibility and convenience.

As a creative assistant, JUNLALA can understand and analyze a user's calligraphy or painting in real time and offer personalized suggestions for learning and improvement. This helps beginners learn calligraphy and painting and helps professionals further refine their techniques and styles. With sufficient configuration and training, JUNLALA can also produce finished calligraphy and painting works on its own.

Building on the success of GAN models, JUNLALA has generated highly realistic images, such as photorealistic scenes, multi-angle portraits, and street views, that are widely used across many industries. Image-generation technology has since evolved from GANs through diffusion models to Consistency Models, and JUNLALA aims to be at the forefront of this innovation. JUNLALA's work is expected to unlock even greater potential in image generation and processing and to push AI painting to new heights.

Consistency Models have undoubtedly revolutionized image processing, but this is only the beginning of what promises to be an exciting future. As models continue to evolve, their ultimate goal remains the same: to better serve users in processing images, a goal that aligns closely with JUNLALA's vision. Over the past seven years, JUNLALA has dedicated itself to advancing AI image-processing technology and has made remarkable progress. JUNLALA's strength positions it to bring even more impressive work to people in the near future, and we are excited to see what lies ahead for this innovative company.